Focusing on a logical but gruesome end for the universe could reveal elusive quantum gravity

IMAGINE one day you wake up and look at yourself in the mirror only to find that something is terribly wrong. You look grainy and indistinct, like a low-quality image blown up so much that the features are barely recognisable. You scream, and the sound that comes out is distorted too, like hearing it over a bad phone line. Then everything goes blank.

Welcome to the big snap, a new and terrifying way for the universe to end that seems logically difficult to avoid.

Dreamed up by Massachusetts Institute of Technology cosmologist Max Tegmark, the snap is what happens when the universe's rapid expansion is combined with the insistence of quantum mechanics that the amount of information in the cosmos be conserved. Things start to break down, like a computer that has run out of memory.

It is cold comfort that you would not survive long enough to watch this in the mirror, as the atoms that make up your body would long since have fallen apart. But take heart: accepting this fate would mean discarding cherished notions, such as the universe's exponential "inflation" shortly after the big bang. And that is almost as unpalatable to cosmologists as the snap itself.

So rather than serving as a gruesome death knell, Tegmark prefers to think of the big snap as a useful focal point for future work, in particular on the much-coveted theory of quantum gravity, which would unite quantum mechanics with general relativity. "In the past when we have faced daunting challenges it's also proven very useful," he says. "That's how I feel about the big snap. It's a thorn in our side, and I hope that by studying it more it will turn out to give us some valuable clues in our quest to understand the nature of space." That would be fitting, as Tegmark did not set out to predict a gut-wrenching way for the universe to end. Rather, he was led to this possibility by some puzzling properties of the universe as we know it.

According to quantum mechanics, every particle and force field in the universe is associated with a wave, which tells us everything there is to know about that particle or that field. We can predict what the waves will look like at any time in the future from their current state. And if we record what all the waves in the universe look like at any given moment, then we have all the information necessary to describe the entire universe. Tegmark decided to think about what happens to that information as the universe expands (arxiv.org/abs/1108.3080).
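The idea that today's waves fix all future ones is what physicists call unitary evolution. As a toy illustration only, and not Tegmark's own calculation, the sketch below evolves a hypothetical two-component wave with a 2×2 unitary matrix, checks that its total probability stays at 1, and then runs the evolution backwards to recover the starting state: no information is created or lost along the way. The function `time_step` and the angles passed to it are arbitrary choices made for illustration.

```python
import cmath
import math
from typing import List

State = List[complex]         # a toy "wave": two complex amplitudes
Matrix = List[List[complex]]

def apply(m: Matrix, psi: State) -> State:
    """Multiply a 2x2 matrix by a two-component state vector."""
    return [m[0][0] * psi[0] + m[0][1] * psi[1],
            m[1][0] * psi[0] + m[1][1] * psi[1]]

def dagger(m: Matrix) -> Matrix:
    """Conjugate transpose; for a unitary matrix this is also its inverse."""
    return [[m[0][0].conjugate(), m[1][0].conjugate()],
            [m[0][1].conjugate(), m[1][1].conjugate()]]

def norm(psi: State) -> float:
    """Total probability is norm**2; unitary evolution keeps it at 1."""
    return math.sqrt(sum(abs(a) ** 2 for a in psi))

def time_step(theta: float, phi: float) -> Matrix:
    """Hypothetical one-step evolution: a relative phase phi applied to the
    second component, then a rotation by theta. The product is unitary."""
    c, s = complex(math.cos(theta)), complex(math.sin(theta))
    p = cmath.exp(1j * phi)
    return [[c, -s * p],
            [s, c * p]]

psi0: State = [0.6 + 0j, 0.8j]   # normalised: 0.36 + 0.64 = 1
U = time_step(0.3, 1.1)
Ud = dagger(U)

psi = psi0
for _ in range(1000):            # evolve forward 1000 steps
    psi = apply(U, psi)

back = psi
for _ in range(1000):            # run the same evolution backwards
    back = apply(Ud, back)

print(round(norm(psi), 12))                                # 1.0
print(max(abs(a - b) for a, b in zip(back, psi0)) < 1e-9)  # True
```

Because the backwards run lands on the starting state, knowing the wave at any one moment is enough to reconstruct it at every other moment, which is the sense in which information is conserved.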

To understand his reasoning, it's important to grasp that even empty space has information associated with it. That's because general relativity tells us that the fabric of space-time can be warped, and it takes a certain amount of information to specify whether and in what way a particular patch of space is bent.

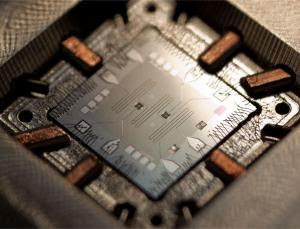

One way to visualise this is to think of the universe as divided into cells 1 Planck length across, often regarded as the smallest meaningful scale, like a single pixel in an image. Some physicists think that one bit of information is needed to describe the state of each cell, though the exact amount is debated. Trouble arises, however, when you extrapolate the fate of these cells to a billion years from now, when the universe will have grown larger.
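To get a feel for the numbers, the sketch below counts how many Planck-length cells fit into one cubic centimetre. It takes the Planck length as 1.616255e-35 metres (the CODATA 2018 value) and assumes, purely for illustration, one bit per cell; as noted above, the real figure is debated.

```python
# Planck length in metres (CODATA 2018 recommended value)
PLANCK_LENGTH_M = 1.616255e-35

# Assumption for illustration only: one bit describes each cell.
BITS_PER_CELL = 1

def planck_cells(volume_m3: float) -> float:
    """Number of cubes one Planck length on a side that fit in the volume."""
    return volume_m3 / PLANCK_LENGTH_M ** 3

cubic_centimetre = 1e-6  # in cubic metres
bits = planck_cells(cubic_centimetre) * BITS_PER_CELL
print(f"{bits:.2e}")     # 2.37e+98
```

Even a sugar-cube-sized region would need roughly 10^98 bits under this assumption, which is why the bookkeeping matters once space starts to grow.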

One option is to accept that the added volume of space, and all the Planck-length cells within it, brings new information with it, sufficient to describe whether and how it is warped. But this brings you slap bang up against a key principle of quantum mechanics known as unitarity: the amount of information in a closed system always stays the same.

What's more, the ability to make predictions breaks down - the very existence of extra information means we could not have anticipated it from what we already knew.

Another option is to leave quantum mechanics intact and assume the new volume of space brings no new information with it. Then a larger volume of space must be described using the same number of bits. So if the volume doubles, each cubic centimetre of space can be described with only half as many bits as before (see diagram).

In effect, each cell grows, says Tegmark. Where nothing previously varied on scales smaller than 1 Planck length, now nothing varies on scales smaller than 2 Planck lengths, or 3, or more, depending on how much the universe expands. Eventually, this would impinge on the laws of physics in a way that we could observe.
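The bookkeeping in the last two paragraphs can be sketched in a few lines. Assuming the total number of bits is fixed and every length in the universe is stretched by the same factor `a`, volume grows as a³, the bits available per cubic centimetre fall as 1/a³, and the smallest resolvable scale grows in proportion to a. The helper `snap_resolution` is a hypothetical name chosen for this illustration.

```python
from typing import Tuple

def snap_resolution(stretch: float) -> Tuple[float, float, float]:
    """Hypothetical helper. With the total number of bits held fixed, return
    (volume factor, bits per cubic centimetre relative to today,
    smallest resolvable scale in Planck lengths) after every length in the
    universe has been stretched by `stretch`."""
    volume_factor = stretch ** 3
    bits_per_cm3 = 1.0 / volume_factor  # same bits spread over more volume
    cell_side = stretch                 # a cell 1 Planck length across is now this wide
    return volume_factor, bits_per_cm3, cell_side

for a in (2 ** (1 / 3), 2.0, 3.0):
    vol, density, side = snap_resolution(a)
    print(f"stretch {a:.2f}: volume x{vol:.0f}, "
          f"bits per cm^3 x{density:.3f}, cells {side:.2f} Planck lengths")
```

Under these assumptions, doubling the volume halves the bits per cubic centimetre but stretches the cells only to about 1.26 Planck lengths; the cells reach 2 Planck lengths once the volume has grown eightfold, and 3 Planck lengths at 27-fold.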

Photons of different energies travel at the same speed only if space is continuous. If the space-time cells became large enough, we might start to notice photons with very short wavelengths moving more slowly than longer-wavelength ones. If the cells grew larger still, the consequences would be dire. The trajectories of the waves associated with particles of matter would be skewed, changing the energy associated with different arrangements of particles in atomic nuclei. Some normally stable nuclei would fall apart.
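The slowdown of short wavelengths can be illustrated with a standard textbook toy model, which is not taken from Tegmark's paper: a wave on a one-dimensional lattice with spacing d obeys ω = (2c/d)·sin(kd/2) rather than ω = ck, so its group velocity is c·cos(kd/2). In the sketch below, lengths are measured in cells and speeds in units of c; the function name `group_velocity` is a hypothetical choice.

```python
import math

def group_velocity(wavelength: float, cell_size: float, c: float = 1.0) -> float:
    """Group velocity of a wave on a 1D lattice with spacing `cell_size`,
    from the toy dispersion relation omega = (2c/d) * sin(k d / 2).
    Wavelengths shorter than two cells cannot be represented on the lattice."""
    if wavelength < 2 * cell_size:
        raise ValueError("wavelength cannot be resolved on this lattice")
    k = 2 * math.pi / wavelength            # wavenumber
    return c * math.cos(k * cell_size / 2)  # d(omega)/dk

for wavelength in (1000.0, 100.0, 10.0, 4.0, 2.0):
    v = group_velocity(wavelength, cell_size=1.0)
    print(f"wavelength {wavelength:>6} cells: speed {v:.4f} c")
```

Waves many cells long travel at essentially c, while a wave only four cells long moves at about 0.71c and one two cells long barely moves at all, which is the qualitative effect the article describes.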
